Ever lost sleep over a system crash during peak hours? Yeah, me too.

In the world of cybersecurity and data management, downtime isn’t just inconvenient—it’s catastrophic. Imagine losing critical customer data or exposing sensitive information due to an unforeseen failure. That’s where high availability testing comes into play. Today, we’re diving deep into this microniche of fault tolerance to help you keep your systems running smoothly.

This post will walk you through:

- The crucial role of high availability testing in mitigating risks.

- A step-by-step guide on how to perform it effectively.

- Tips, tools, best practices, real-world case studies, and FAQs.

Table of Contents

- Key Takeaways

- Why High Availability Testing Matters

- Step-by-Step Guide to High Availability Testing

- 10 Pro Tips for Effective High Availability Testing

- Real-World Case Studies You Can Learn From

- Frequently Asked Questions About High Availability Testing

Key Takeaways

- High availability testing ensures minimal downtime by simulating failures before they occur.

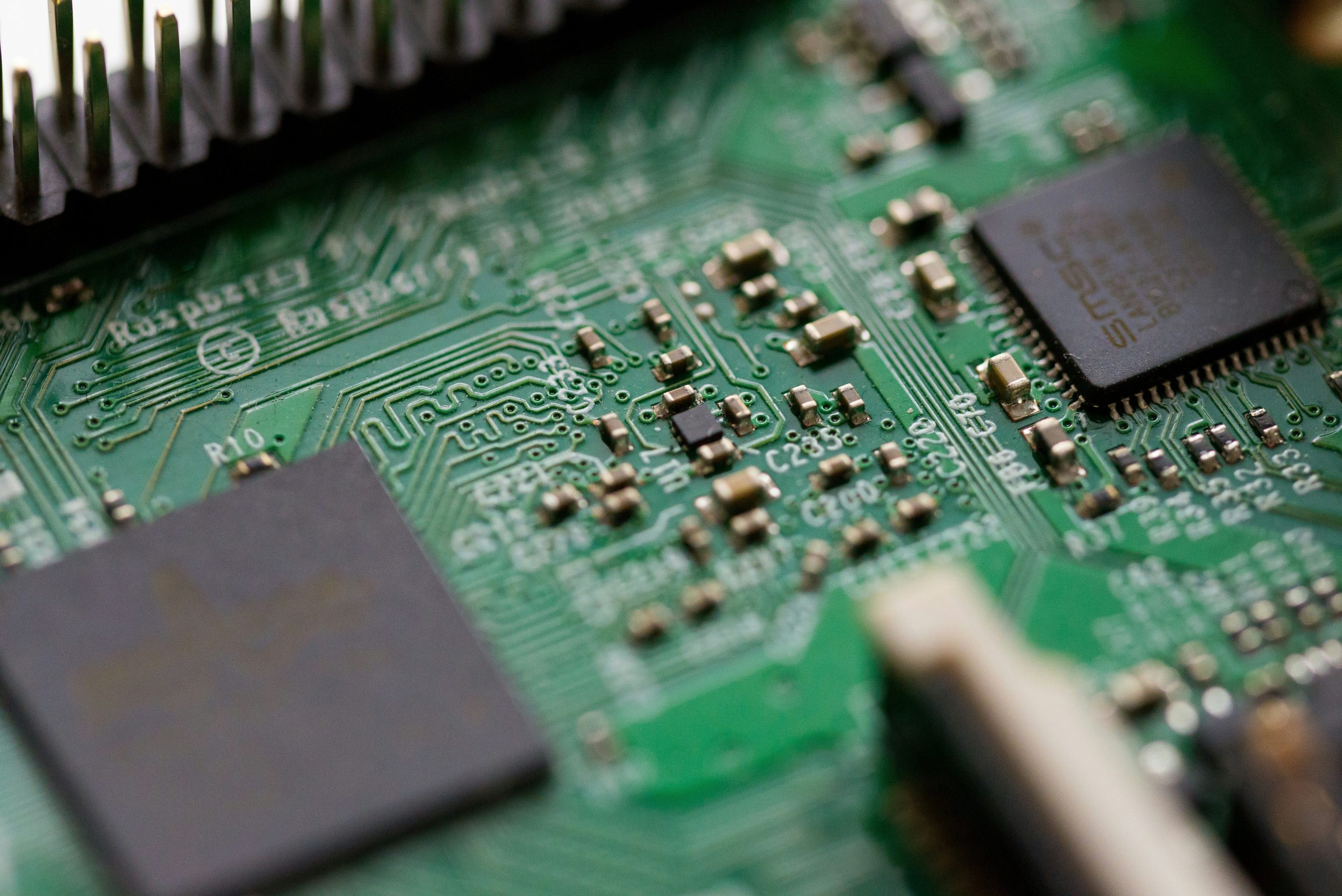

- Fault tolerance relies heavily on redundancy, load balancing, and failover mechanisms.

- Investing time in proper high availability testing saves money, reputation, and user trust in the long run.

Why High Availability Testing Matters

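If I told you that 98% of companies say even one hour of downtime costs them over $100,000, would you believe me? Well, that figure is usually traced to ITIC’s hourly cost of downtime survey (it is often misattributed to Gartner), and it’s not just theory. It’s what organizations themselves report.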

In cybersecurity, every second counts. A single failure can expose vulnerabilities, leaving networks open to attacks. And if you think “it won’t happen to me,” let me share a cautionary tale:

I once worked with a company whose entire database crumbled during a routine server update. Why? They had no high availability measures in place and had never tested a single failover procedure beforehand. The result? Me, at midnight, with a laptop whirring and overheating while I tried to fix their mess.

Pro tip: Always test systems under realistic conditions before launching anything mission-critical. It’s chef’s kiss for avoiding disasters later.

Step-by-Step Guide to High Availability Testing

Optimist You: “We’ve got this! Let’s bulletproof our system!”

Grumpy You: “Ugh, fine—but only after coffee.”

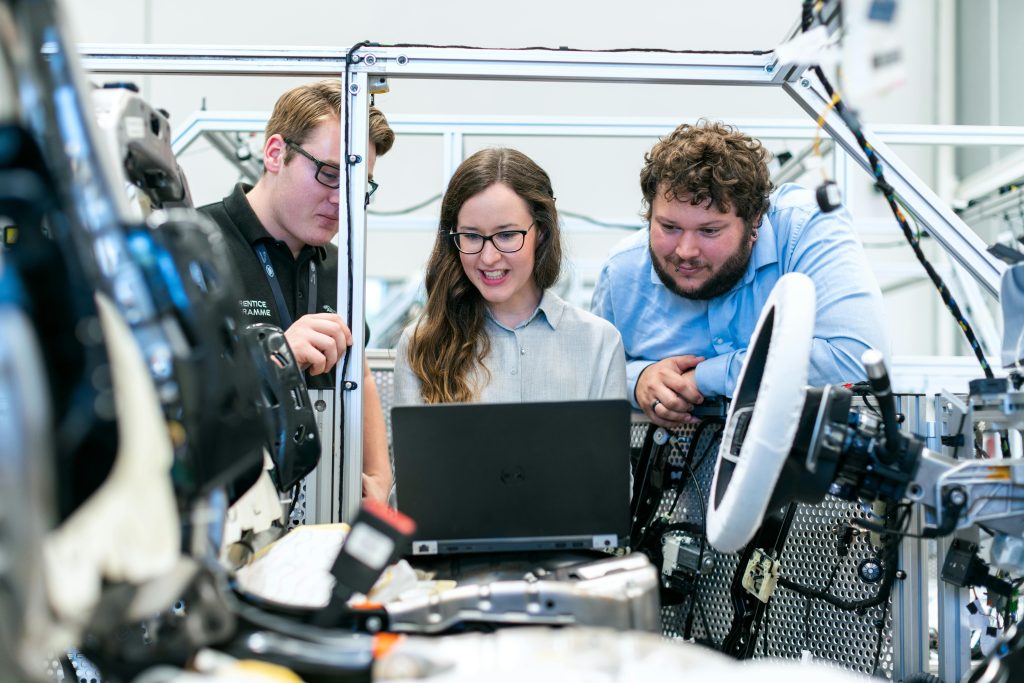

- Identify Critical Systems: Start by mapping out which components must remain operational at all times (e.g., databases, firewalls).

- Design Redundancy: Implement backup servers, mirrored databases, and automated failover processes.

- Simulate Failures: Use tools like Chaos Monkey to induce controlled outages across various layers of your infrastructure.

- Monitor Recovery Times: Track Mean Time To Recovery (MTTR) closely. Is the system back up fast enough for the business to tolerate?

- Document Findings: Log everything—from errors encountered to successful recovery strategies.
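To make the steps above concrete, here is a minimal sketch of a failover drill in Python. Everything in it is a stand-in: `Node`, `FailoverPool`, and `run_failover_drill` are hypothetical names invented for illustration, and the "outage" is just a flag on an in-memory object. A real drill would target actual hosts with a tool like Chaos Monkey, but the shape is the same: kill a component, probe the system, time the recovery, and record what happened.

```python
import time


class Node:
    """Hypothetical stand-in for a server; a real drill would target actual hosts."""

    def __init__(self, name):
        self.name = name
        self.healthy = True

    def handle(self, request):
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} served {request}"


class FailoverPool:
    """Sends each request to the first healthy node (primary first, then backups)."""

    def __init__(self, nodes):
        self.nodes = nodes

    def handle(self, request):
        for node in self.nodes:
            try:
                return node.handle(request)
            except ConnectionError:
                continue  # this node is down, try the next one
        raise RuntimeError("all nodes are down")


def run_failover_drill(pool, victim, clock=time.monotonic):
    """Take `victim` offline, then time how long the pool needs to serve a probe."""
    victim.healthy = False
    started = clock()
    response = pool.handle("health-probe")
    recovery_seconds = clock() - started
    # The returned dict doubles as the written record of the drill.
    return {
        "killed": victim.name,
        "response": response,
        "recovery_seconds": recovery_seconds,
    }


primary, backup = Node("primary"), Node("backup")
pool = FailoverPool([primary, backup])
report = run_failover_drill(pool, primary)
print(report["response"])  # backup served health-probe
```

In this toy version recovery is near-instant because failover is a loop over two objects. Against real infrastructure, the same timing wrapper is where your MTTR number comes from.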

Don’t Skip This Step

Set up monitoring alerts tied directly to your incident response team. When something goes sideways, silence is the enemy!
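Here is one way that alerting logic might look, sketched in Python. `HealthAlerter` is a hypothetical class, the threshold of three failed checks is an arbitrary assumption, and `notify` is whatever callable reaches your incident response team (a pager hook, a chat webhook, and so on). The list used below is only there so the example runs on its own.

```python
class HealthAlerter:
    """Hypothetical alerter: pages once after `threshold` consecutive failed checks."""

    def __init__(self, threshold, notify):
        self.threshold = threshold
        self.notify = notify  # any callable that takes a message string
        self.consecutive_failures = 0
        self.alerting = False

    def record(self, check_passed):
        if check_passed:
            self.consecutive_failures = 0
            if self.alerting:
                # Tell the team when the incident is over, too.
                self.alerting = False
                self.notify("RECOVERED")
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.threshold and not self.alerting:
            self.alerting = True
            self.notify(f"DOWN after {self.consecutive_failures} failed checks")


sent = []  # stand-in for a real paging or chat integration
alerter = HealthAlerter(threshold=3, notify=sent.append)
for check_passed in [True, False, False, False, False, True]:
    alerter.record(check_passed)
print(sent)  # ['DOWN after 3 failed checks', 'RECOVERED']
```

Requiring several consecutive failures keeps one dropped packet from waking anyone up, and alerting only once per incident keeps the pager from turning into noise.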

10 Pro Tips for Effective High Availability Testing

Here’s the deal: Some advice might make you raise an eyebrow. But trust me—these tips work wonders:

- Automate Everything: Manual checks are prone to human error. Tools like Nagios or Zabbix save lives.

- Test Under Load: Simulate peak traffic scenarios to ensure scalability.

- Prioritize Security Patches: Unpatched vulnerabilities are ticking time bombs.

- Use Realistic Scenarios: Don’t assume your backups will always restore cleanly; test thoroughly.

- Involve Stakeholders Early: Align expectations early to avoid last-minute hiccups.

- Conduct Regular Drills: Practice makes perfect—and calm during crises.

- Review Logs Post-Test: Look beyond pass/fail results; dig deeper into anomalies.

- Adopt Cloud Solutions Wisely: Leverage cloud providers’ built-in HA features but don’t depend solely on them.

- Avoid Overly Complex Setups: Simpler architectures often mean fewer points of failure.

- Rant Alert: Stop ignoring low-priority warnings in logs—they add up!
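The “Use Realistic Scenarios” tip is the easiest one to nod along to and never do, so here is a rough Python sketch of a restore check. It assumes a backup is a plain file copy and that “restoring” means copying it back, which is far simpler than a real database restore. The file names and the `restore_matches_source` helper are made up for illustration. The idea carries over, though: restore for real, then compare checksums instead of trusting that the backup job exited cleanly.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path):
    """Hash a file in chunks so large backups do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def restore_matches_source(source, backup, restore_target):
    """Restore `backup` to `restore_target` and compare it with `source`."""
    shutil.copyfile(backup, restore_target)  # stand-in for a real restore procedure
    return sha256_of(source) == sha256_of(restore_target)


with tempfile.TemporaryDirectory() as workdir:
    work = Path(workdir)
    source = work / "orders.db"        # hypothetical file names
    backup = work / "orders.db.bak"
    source.write_bytes(b"order-1\norder-2\n")
    shutil.copyfile(source, backup)

    clean_ok = restore_matches_source(source, backup, work / "restored.db")

    backup.write_bytes(b"order-1\n")   # simulate a silently truncated backup
    corrupt_ok = restore_matches_source(source, backup, work / "restored.db")

print(clean_ok, corrupt_ok)  # True False
```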

Real-World Case Studies You Can Learn From

Let’s talk about two companies: one that learned its lesson the painful way, and one that did the work up front.

Case Study 1: Company X’s Epic Downtime Disaster

Company X ignored regular high availability tests because “nothing ever broke.” One day, their primary storage array failed during Black Friday sales. Revenue loss? Over $5 million. Lesson learned? Test regularly—or pay dearly.

Case Study 2: Bank Y Saves Millions Through Rigorous HA Protocols

Bank Y invested heavily in simulated failure scenarios using chaos engineering principles. Their proactive approach prevented potential losses estimated at $70 million annually. Talk about ROI!

Frequently Asked Questions About High Availability Testing

What Is High Availability Testing Exactly?

It’s the process of verifying that a system remains accessible and functional despite hardware or software failures and network issues, typically by triggering those failures on purpose and watching how the system recovers.

How Often Should We Perform These Tests?

Quarterly, ideally. However, more frequent testing may be necessary depending on your risk profile.

Which Tools Are Best For HA Testing?

Some popular options include Chaos Monkey and Gremlin for injecting failures, and Apache JMeter for generating load while you do it.

What’s the Most Common Mistake People Make?

Assuming redundancy equals resilience. Without rigorous testing, redundant systems might still fail together.
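A bit of back-of-the-envelope arithmetic shows why. The Python sketch below uses the standard formula for replicas that fail independently, where the system is up as long as at least one copy is up. The 99% and 99.5% figures are made-up inputs, not measurements, and `combined_availability` is a helper name chosen for this example.

```python
def combined_availability(single, copies):
    """Availability of `copies` independent replicas when any one of them suffices."""
    return 1 - (1 - single) ** copies


# Two replicas at 99% each, failing independently: roughly 99.99% together.
independent = combined_availability(0.99, 2)

# The same pair behind one shared dependency (say, a single power feed or switch)
# that is up 99.5% of the time. The shared piece caps the whole system.
shared_dependency = 0.995
with_shared_fate = shared_dependency * independent

print(f"independent replicas: {independent:.4%}")
print(f"with shared dependency: {with_shared_fate:.4%}")
```

On paper the redundant pair looks far better than a single server. Put both replicas behind one shared component and the result drops below what that shared component alone can deliver, which is exactly the kind of hidden coupling a failure test is meant to expose.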

Conclusion

High availability testing isn’t sexy, but it’s essential. By identifying weaknesses ahead of time, you protect yourself from costly outages and safeguard both data integrity and customer trust. Remember: no one wins when systems go down, least of all your bottom line.

Like a Tamagotchi, your cybersecurity strategy needs daily care. Stay vigilant, friends.